Navigating the Hex Universe

A Reinforcement Learning Exploration with Unity ML-Agents

Project: DigitalFUTURES 2022 Workshop

Date: 2022.7

Team: Biru Cao, Maoran Sun

Keywords: Reinforcement Learning, Unity

This project applies reinforcement learning to the vision of a metaverse and virtual metropolis. A robot is placed in a space made up of hexagonal debris tiles, and its goal is to avoid traps while reaching targets. Using Unity's ML-Agents toolkit, we trained a reinforcement learning agent to navigate this grid-based environment populated with targets and traps.

Game Environment

Action: move to one of the six adjacent hexagons or stay still

Reward: reach a target (+1), reach a trap (-1), revisit a previously visited location (-0.01), cross the boundary (-0.1)
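The action and reward definitions above can be illustrated with a small Python sketch. It is not the authors' Unity (C#) implementation; it assumes axial (q, r) hex coordinates, treats a boundary crossing as a blocked move that leaves the agent in place, reads the revisit entry as a small penalty (-0.01), and assumes an episode ends on reaching a target or trap. The names `ACTIONS`, `in_bounds`, and `step` are hypothetical.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]  # axial hex coordinates (q, r); an assumption of this sketch

# Action 0 = stay still; actions 1-6 = the six adjacent hexagons (axial offsets).
ACTIONS = [(0, 0), (1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


def in_bounds(cell: Cell, cols: int, rows: int) -> bool:
    """Hypothetical boundary test for a cols x rows axial grid."""
    q, r = cell
    return 0 <= q < cols and 0 <= r < rows


def step(pos: Cell, action: int, cols: int, rows: int,
         targets: Set[Cell], traps: Set[Cell], visited: Set[Cell]):
    """Return (new_pos, reward, done) under the reward table above."""
    dq, dr = ACTIONS[action]
    nxt = (pos[0] + dq, pos[1] + dr)
    if not in_bounds(nxt, cols, rows):
        return pos, -0.1, False          # boundary: move blocked, agent stays put
    if nxt in targets:
        return nxt, 1.0, True            # target reached, episode ends (assumed)
    if nxt in traps:
        return nxt, -1.0, True           # trap reached, episode ends (assumed)
    reward = -0.01 if nxt in visited else 0.0  # revisiting (incl. staying) costs a little
    visited.add(nxt)
    return nxt, reward, False
```

Because staying still keeps the agent on an already visited cell, in this sketch it is also charged the revisit penalty, which is one way to discourage idling.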

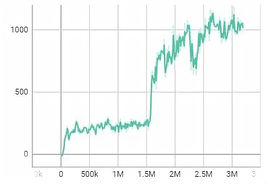

The environment consists of a grid with a specified number of rows and columns, on which the agent, targets, and traps are placed. The positions of the targets and traps are randomly generated at the start of each episode so that the agent encounters a diverse range of situations. The agent's policy is updated from the rewards it receives, and training balances exploration (trying new actions) against exploitation (choosing the best-known actions). The agent's performance is evaluated by the mean reward over a series of episodes.
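The per-episode randomization and the mean-reward metric described above might look roughly like the following Python sketch. It assumes that the agent, targets, and traps must all occupy distinct cells; `reset` and `mean_reward` are hypothetical helpers, not ML-Agents API calls.

```python
import random
from statistics import mean
from typing import List, Set, Tuple

Cell = Tuple[int, int]  # (column, row) grid cell


def reset(rows: int, cols: int, n_targets: int, n_traps: int,
          rng: random.Random) -> Tuple[Cell, Set[Cell], Set[Cell]]:
    """Place the agent, targets and traps on distinct random cells."""
    cells = [(q, r) for q in range(cols) for r in range(rows)]
    # Sampling without replacement guarantees no two objects share a cell.
    picks = rng.sample(cells, 1 + n_targets + n_traps)
    agent = picks[0]
    targets = set(picks[1:1 + n_targets])
    traps = set(picks[1 + n_targets:])
    return agent, targets, traps


def mean_reward(episode_returns: List[float]) -> float:
    """Performance metric: average total reward over a batch of episodes."""
    return mean(episode_returns)
```

Passing a seeded `random.Random` makes a given layout reproducible; a fixed-location setup could be approximated by reusing the same seed for every episode.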

Agent and environment

Training

The project aims to demonstrate the capabilities of reinforcement learning in game-like environments and explore strategies for reward shaping and policy optimization.

Under the current reward structure, the agent receives +1 for reaching a target, -0.5 for reaching a trap, -0.01 for staying still, and +0.01 for each step that moves it closer to a target.

This reward structure is designed to encourage exploration, so that the agent does not simply stay still to avoid the traps. Over multiple rounds of testing, we increased the penalty for staying still and added a small reward for every step taken toward a target.
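The shaped reward described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the project's code: it assumes axial (q, r) hex coordinates and interprets "moving closer to a target" as reducing the hex distance to the nearest target. `hex_distance`, `nearest_target_distance`, and `shaped_reward` are hypothetical names.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]  # axial hex coordinates (q, r); assumed representation


def hex_distance(a: Cell, b: Cell) -> int:
    """Number of hexagon steps between two cells in axial coordinates."""
    dq, dr = a[0] - b[0], a[1] - b[1]
    return (abs(dq) + abs(dr) + abs(dq + dr)) // 2


def nearest_target_distance(cell: Cell, targets: Set[Cell]) -> int:
    """Hex distance from a cell to the closest target."""
    return min(hex_distance(cell, t) for t in targets)


def shaped_reward(old: Cell, new: Cell, targets: Set[Cell], traps: Set[Cell]) -> float:
    """Reward for moving from `old` to `new` under the current reward structure."""
    if new in targets:
        return 1.0
    if new in traps:
        return -0.5
    if new == old:
        return -0.01  # penalty for staying still
    if nearest_target_distance(new, targets) < nearest_target_distance(old, targets):
        return 0.01   # small bonus for a step toward a target
    return 0.0
```

Keeping the shaping bonus (+0.01) far smaller than the target reward (+1) is meant to guide the agent toward targets without making small progress steps worth more than actually reaching one.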

Reinforcement learning framework

2 Targets and 2 traps

1 Target and 1 trap

1 Target and 1 trap with fixed locations

Results

Reinforcement learning framework

Agent behaviors
